SMS Blog

Extending Kubernetes with Kyverno

Kubernetes is an extremely powerful orchestration platform with no shortage of features. However, where the native feature set lacks certain desired functionality, dynamic admission controllers can provide a mechanism to address those shortcomings. Kyverno is a free and open-source policy engine that functions like a “Swiss Army knife” for Kubernetes. It can extend feature sets, implement security policy, and assist with multi-tenancy, automation, and configuration control. In this post I’ll outline the functionality of Kyverno and give examples of how Kyverno can address each of these areas.

Kubernetes Admission Controllers

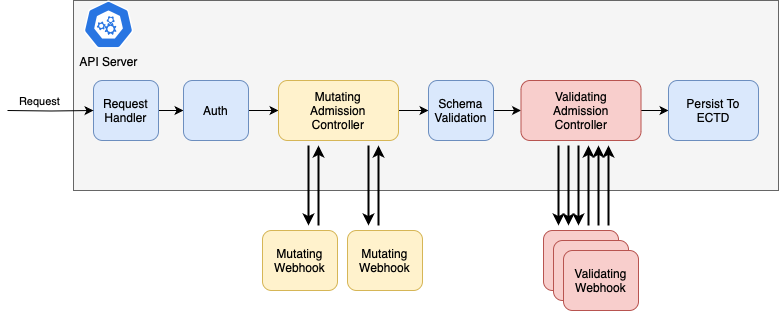

Admission controllers are a native Kubernetes feature that intercepts requests sent to the Kubernetes API and either validates or modifies them. The Kubernetes API server is the process responsible for authenticating, authorizing, validating, and serving all requests to create, read, update, and delete the resources persisted in the cluster. These resources include pods, volumes, services, and every other object that makes up a running cluster. Running any application on the cluster involves sending a number of requests to the API to create and persist those resources. To exert some control over those resources, admission controllers intercept each request after it has been authenticated and authorized, but before the object is persisted. Admission applies only to requests that create, update, or delete objects, or connect to them (as with `kubectl exec`). Read requests are not subject to admission control.

There are two types of admission controllers: validating and mutating. Validating admission controllers do what the name suggests: they inspect an API request and return either an allow or a deny response. They can be used both to implement custom policy defined by an administrator and to catch problems in a request that are not schema violations. Mutating admission controllers can do the same, with the added ability to modify (mutate) the request in flight.
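For webhook-based admission controllers (the dynamic admission controllers described below), the allow or deny decision travels back to the API server in an AdmissionReview response. A minimal sketch of a denial follows, written as YAML for readability although webhooks exchange the same structure as JSON. The `uid` must echo the `uid` of the incoming request; the value shown is only a placeholder.

```yaml
apiVersion: admission.k8s.io/v1
kind: AdmissionReview
response:
  # must match request.uid from the AdmissionReview the API server sent
  uid: "705ab4f5-6393-11e8-b7cc-42010a800002"
  # false rejects the request; true admits it
  allowed: false
  status:
    code: 403
    message: "Deployments must have label tenant defined."
```

A mutating webhook answers the same way, but with `allowed: true` plus a base64-encoded JSON Patch in `response.patch` and `patchType: JSONPatch`.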

Multiple admission controllers may act on any request, but validating admission controllers are always called after mutating admission controllers, so they see the final version of the object. Multiple mutating controllers are called sequentially, each receiving the output of the previous one, which prevents non-deterministic final mutation results. Multiple validating webhooks, which cannot change the object, are called in parallel for efficiency.

Kubernetes includes several built-in admission controllers that implement specific functions. These include mutating controllers that set a default class on resources that do not specify one (e.g., DefaultIngressClass, DefaultStorageClass), as well as validating controllers that enforce policy and constraints in the cluster (e.g., CertificateSubjectRestriction, PodSecurity). Admission control is made extensible through the ValidatingAdmissionWebhook and MutatingAdmissionWebhook admission controllers. These send HTTP callbacks (webhooks) to external services, which perform the actual validation or mutation. The services that implement these webhooks are known as dynamic admission controllers.
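Dynamic admission controllers are registered with the API server through webhook configuration objects. Kyverno creates and manages its own, so you do not write these by hand when using it, but a minimal hypothetical registration shows what the API server is told: which requests to send, and where to send them. The service `policy-webhook`, the namespace `policy-system`, and the path `/validate` are placeholders.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: example-policy-webhook
webhooks:
  - name: validate.policy.example.com   # must be a fully qualified name
    admissionReviewVersions: ["v1"]
    sideEffects: None
    # reject the request if the webhook cannot be reached
    failurePolicy: Fail
    timeoutSeconds: 10
    clientConfig:
      service:
        namespace: policy-system
        name: policy-webhook
        path: /validate
        port: 443
      # caBundle: <base64 CA certificate that signed the webhook's serving cert>
    rules:
      # only CREATE/UPDATE of apps/v1 Deployments are sent to the webhook
      - apiGroups: ["apps"]
        apiVersions: ["v1"]
        operations: ["CREATE", "UPDATE"]
        resources: ["deployments"]
```

A MutatingWebhookConfiguration has the same overall shape.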

Kyverno’s core functionality is implemented through dynamic admission controllers. Many Kubernetes-native applications use dynamic admission controllers, but most often only to validate their own resources and implement features specific to that application. Kyverno instead provides a generic policy framework: administrators define custom policies as Kubernetes resources, and Kyverno executes those policies within its dynamic admission controller.

Kyverno Basics

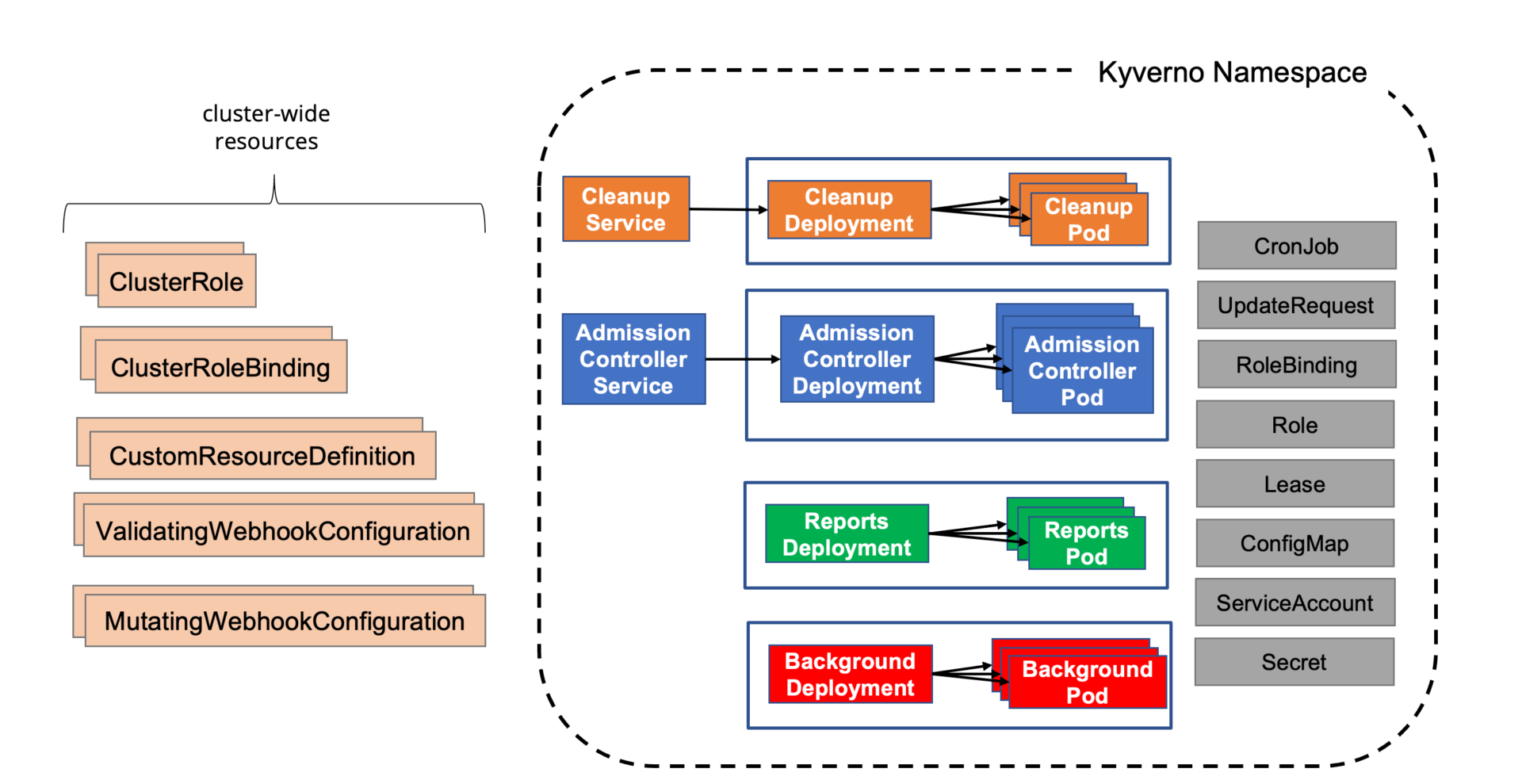

Kyverno can be installed in a cluster either with Helm or from manifests, as outlined in the Kyverno installation instructions. It consists of several components, some of them optional, but the most important is the admission controller deployment, which receives the webhook callbacks from the Kubernetes API server.

Admission policies in Kyverno are configured by defining Kyverno policy custom resources: a ClusterPolicy applies cluster-wide, and a Policy applies within a single namespace. A policy selects the resources it applies to and defines the rules for validating, mutating, or generating them. It is organized as an ordered list of rules, each of which contains, at minimum, a match declaration followed by a validate, mutate, or generate declaration.

The following policy matches requests related to a Kubernetes deployment and rejects the request if it does not have a particular label applied to the resource.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-tenant-label
spec:
  rules:
    - name: require-tenant-label
      match:
        any:
          - resources:
              kinds:
                - apps/v1/Deployment
      validate:
        failureAction: Enforce
        message: "Deployments must have label `tenant` defined."
        pattern:
          metadata:
            labels:
              # "?*" requires the label to be present with a non-empty value
              tenant: "?*"
```
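To try the policy, a minimal Deployment like the sketch below should be admitted, because its own metadata carries a `tenant` label. Removing that label should cause the API server to reject the request with the policy’s message. Note that the pattern checks the Deployment’s `metadata.labels`, not the pod template’s labels. The names `web` and `team-a` and the image tag are placeholders.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  labels:
    tenant: team-a   # satisfies the require-tenant-label rule
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27
```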

Matching

Selecting which resources to include in the policy is achieved through the use of a match statement in the policy rule, which filters the request based on a variety of criteria, including the kind of resource, the name of the resource, and the operation for the resource. Additional options for matching include the use of namespace selectors, label selectors, annotations, and wildcards.

In the following match block, any request to CREATE or UPDATE a deployment resource in the dev namespace will result in a successful match for the rule.

```yaml
match:
  any:
    - resources:
        kinds:
          - apps/v1/Deployment
        namespaces:
          - dev
        operations:
          - CREATE
          - UPDATE
```
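The namespace and label selectors mentioned above can replace a hard-coded namespace list. As a sketch, assuming namespaces carry a hypothetical `environment: dev` label and workloads a hypothetical `gpu: "true"` label, a comparable match block could be:

```yaml
match:
  any:
    - resources:
        kinds:
          - apps/v1/Deployment
        operations:
          - CREATE
          - UPDATE
        # matches any namespace labeled environment=dev
        namespaceSelector:
          matchLabels:
            environment: dev
        # and only Deployments that carry the label gpu=true
        selector:
          matchLabels:
            gpu: "true"
```

Entries under `any` are OR’ed together, while all of the criteria inside a single `resources` entry must hold.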

Preconditions

Preconditions further narrow the selected resources using more complex selection features, including variables, operators, data from other resources, and data filtering with JMESPath. Keeping this filtering separate from the initial match declaration reduces load and avoids unnecessary computation, since preconditions are only evaluated for resources that have already passed the match criteria.

In the following precondition, the matched deployment is further filtered to only include those which have the pod template configured with nvidia as the runtime class.

```yaml
preconditions:
  all:
    - key: "{{ request.object.spec.template.spec.runtimeClassName }}"
      operator: Equals
      value: nvidia
```
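The precondition compares against a RuntimeClass name, so a RuntimeClass called `nvidia` has to exist for pods that request it to run. A sketch, assuming the nodes’ container runtime is configured with a handler that is also named `nvidia` (tooling such as the NVIDIA GPU Operator typically sets this up, but handler names vary by installation):

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: nvidia      # the value matched by runtimeClassName in the precondition
handler: nvidia     # assumed CRI handler name configured on the GPU nodes
```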

Validation

Validate statements implement the function of a validating webhook: they check the request against the criteria the rule is meant to enforce. A basic validation includes a pattern clause, which describes what the resource must look like. In the following validation, the matched deployment is checked to ensure it has only a single replica.

```yaml
validate:
  failureAction: Enforce
  message: "Deployments using the `nvidia` runtimeclass may not have more than one replica."
  pattern:
    spec:
      replicas: 1
```
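If the match block, precondition, and validation above are combined into a single rule, it applies only to Deployments in the dev namespace whose pod template requests the `nvidia` runtime class. A sketch of a Deployment that would be matched and admitted follows; the name `trainer` and the image are placeholders. Setting `replicas` to 2 should instead be rejected with the message above.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: trainer
  namespace: dev
spec:
  replicas: 1                   # any other value fails the pattern
  selector:
    matchLabels:
      app: trainer
  template:
    metadata:
      labels:
        app: trainer
    spec:
      runtimeClassName: nvidia  # satisfies the precondition
      containers:
        - name: trainer
          image: registry.example.com/trainer:latest
```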

Validation logic can also perform more complex evaluation using Common Expression Language (CEL) expressions.

In the following validation, the matched deployment is checked to ensure that the total GPU limit across all containers in the pod template does not exceed 2.

```yaml
validate:
  failureAction: Enforce
  cel:
    expressions:
      - message: "Pods using the `nvidia` runtimeclass cannot request more than 2 GPUs."
        # has() skips containers that set no limits at all
        expression: "object.spec.template.spec.containers.filter(x, has(x.resources.limits) && 'gpu' in x.resources.limits).map(x, int(x.resources.limits.gpu)).sum() <= 2"
```
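The expression above assumes a resource named `gpu`. Kubernetes requires extended resources to be domain-qualified, and the NVIDIA device plugin usually advertises GPUs as `nvidia.com/gpu`. Because that key contains a dot and a slash, CEL needs bracket syntax to read it. A sketch of the same rule under that assumption:

```yaml
validate:
  failureAction: Enforce
  cel:
    expressions:
      - message: "Pods using the `nvidia` runtimeclass cannot request more than 2 GPUs."
        # keep containers with a GPU limit, convert each limit to int, then sum
        expression: "object.spec.template.spec.containers.filter(c, has(c.resources.limits) && 'nvidia.com/gpu' in c.resources.limits).map(c, int(c.resources.limits['nvidia.com/gpu'])).sum() <= 2"
```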

Mutations

Mutate statements implement the function of a mutating webhook and define how to modify the matched object in the request. They can also modify existing objects other than the one that is the subject of the request. Mutations are defined as patches, either RFC 6902 JSON Patches or strategic merge patches.

In the following mutation, the matched deployment is patched with a JSON patch to set the scheduler used by the pod spec to gpu-scheduler.

```yaml
mutate:
  # a JSON Patch "add" on an existing member replaces its value
  patchesJson6902: |-
    - op: add
      path: "/spec/template/spec/schedulerName"
      value: gpu-scheduler
```
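The same change can be expressed as a strategic merge patch, which mirrors the shape of the resource instead of addressing a JSON path. A sketch of the equivalent mutation:

```yaml
mutate:
  patchStrategicMerge:
    spec:
      template:
        spec:
          schedulerName: gpu-scheduler
```

Strategic merge patches tend to read more naturally for simple field overrides, while JSON Patches are useful when an explicit operation is needed, such as `remove` or an insertion at a specific array index.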

In the following mutation, a resource other than the subject of the request is mutated. These resources are selected under the targets block of the mutate rule. Here, a ConfigMap used as a key-value store is patched with information from the resource in the request.

```yaml
mutate:
  targets:
    - apiVersion: v1
      kind: ConfigMap
      name: gpu-tracker
      namespace: default
  patchStrategicMerge:
    # ConfigMap data is a top-level field (a ConfigMap has no spec)
    data:
      "{{request.object.metadata.namespace}}-{{request.object.metadata.name}}": nvidia
```

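Because this rule mutates an existing resource, the `gpu-tracker` ConfigMap must already exist: Kyverno patches it but does not create it, and Kyverno’s background controller may need additional RBAC permissions to update ConfigMaps. As an illustration, after a hypothetical Deployment named `trainer` is admitted in a hypothetical namespace `ml`, the target would look roughly like this:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: gpu-tracker
  namespace: default
data:
  # key added by the policy: <namespace>-<name> of the triggering resource
  ml-trainer: nvidia
```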
Generation

Generate statements create new resources during the admission process. The generate rule contains a definition of the resource to be created. In the following generate statement, each matched deployment triggers the creation of a PersistentVolumeClaim to be used as a cache drive. Note: the volume still has to be bound to the pod, either ahead of time in the pod spec or via a further mutation.

```yaml
generate:
  apiVersion: v1
  kind: PersistentVolumeClaim
  name: "{{request.object.metadata.name}}-cache"
  namespace: "{{request.object.metadata.namespace}}"
  data:
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      volumeMode: Filesystem
```
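Because the generated claim name is derived from the Deployment name, it can be referenced ahead of time in the pod template. A sketch for a hypothetical Deployment named `trainer` (so the generated claim is `trainer-cache`); the mount path and image are placeholders. Until the claim exists and is bound, the pods remain Pending.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: trainer
spec:
  replicas: 1                  # the generated claim is ReadWriteOnce
  selector:
    matchLabels:
      app: trainer
  template:
    metadata:
      labels:
        app: trainer
    spec:
      containers:
        - name: trainer
          image: registry.example.com/trainer:latest
          volumeMounts:
            - name: cache
              mountPath: /cache
      volumes:
        - name: cache
          persistentVolumeClaim:
            claimName: trainer-cache   # "<name>-cache" as generated by the rule
```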

Variables

Variables may be defined in Kyverno policies to store, process, and reuse data from the request or from external sources. Reusable variable definitions are declared under a context statement. Variables can use JMESPath to filter and transform request data, and may be used in every block of a policy rule except the match and exclude blocks. Variables can also pull information from other objects in the cluster, such as ConfigMaps, and can make API calls to the Kubernetes API or to other external data sources. Together, variables, JMESPath, and API calls allow for exceptionally flexible, customized policies and behavior. Variables are referenced elsewhere in a policy by enclosing them in double curly braces, for example {{ scheduler_name }}.

In the following context block, the variable scheduler_name is extracted in-line from the request object. The variable gpus_requested is extracted by first storing the containers array under the key containers and then running a JMESPath query over that array to sum the GPU limits of all containers in the pod, before assigning that sum to the variable. The variable nvidia_runtime_deployment_count is created by making a read request to the Kubernetes API for all deployments in the same namespace, keeping only those whose pod template uses the nvidia runtime class, and counting them.

```yaml
context:
  - name: scheduler_name
    variable:
      value: "{{request.object.spec.template.spec.schedulerName}}"
  - name: gpus_requested
    variable:
      value:
        containers: "{{ request.object.spec.template.spec.containers }}"
      jmesPath: "sum(containers[?resources.limits.gpu != `null`].resources.limits.gpu)"
  - name: nvidia_runtime_deployment_count
    apiCall:
      # namespaced list URLs include the /namespaces/<name>/ segment
      urlPath: "/apis/apps/v1/namespaces/{{ request.namespace }}/deployments"
      jmesPath: "items[?spec.template.spec.runtimeClassName == 'nvidia'] | length(@)"
```
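Once defined in the context, the variables can be referenced anywhere else in the rule. As a sketch, a hypothetical validate block that uses all three (the thresholds are arbitrary examples, and on UPDATE the count includes the deployment being updated):

```yaml
validate:
  failureAction: Enforce
  message: >-
    Requested {{ gpus_requested }} GPUs with scheduler {{ scheduler_name }};
    namespace already has {{ nvidia_runtime_deployment_count }} nvidia deployments.
  deny:
    conditions:
      # deny if any one of these conditions is true
      any:
        - key: "{{ gpus_requested }}"
          operator: GreaterThan
          value: 2
        - key: "{{ scheduler_name }}"
          operator: NotEquals
          value: gpu-scheduler
        - key: "{{ nvidia_runtime_deployment_count }}"
          operator: GreaterThanOrEquals
          value: 5
```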

Example Policies

Having covered the basics of Kyverno policy definitions, here are several examples of custom policies I have developed to implement security, automation, and feature requirements.

Security / Policy

In the following policy definition, we enforce a security requirement that all ingress resources created in the dev/test namespaces must use the internal-only ingress controller, and that the hostnames in those ingresses are not synced to the public-facing DNS service.

Rules

- Match all Ingress resources in the dev/test namespaces and fail validation if the ingress class is not set to internal-nginx.

- Match all Ingress and Service resources in the dev/test namespaces and fail validation if they carry the annotation external-dns.alpha.kubernetes.io/public: "true". In this setup, the external-dns controller uses this annotation to decide what to publish to the public-facing DNS server.

```yaml
apiVersion: kyverno.io/v2beta1
kind: ClusterPolicy
metadata:
  name: require-internal-ingress-and-dns
spec:
  rules:
    - name: require-internal-ingress
      match:
        any:
          - resources:
              kinds:
                - networking.k8s.io/v1/Ingress
              namespaces:
                - dev
                - test
      validate:
        failureAction: Enforce
        message: "Dev/test ingress must use internal ingress controller."
        pattern:
          spec:
            ingressClassName: internal-nginx
    - name: prohibit-public-external-dns
      match:
        any:
          - resources:
              kinds:
                - networking.k8s.io/v1/Ingress
                - Service
              namespaces:
                - dev
                - test
      validate:
        failureAction: Enforce
        message: "Dev/test ingress and service cannot use public external-dns controller."
        pattern:
          metadata:
            # =() equality anchors only check the annotation when it is present,
            # so resources without annotations are not rejected
            =(annotations):
              =(external-dns.alpha.kubernetes.io/public): "!true"
```
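For reference, an Ingress in the dev namespace that satisfies both rules might look like the sketch below; the host and the backend service `web` are placeholders. Changing `ingressClassName`, or setting the public annotation to `"true"`, should cause it to be rejected.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web
  namespace: dev
  annotations:
    external-dns.alpha.kubernetes.io/public: "false"   # anything but "true" passes
spec:
  ingressClassName: internal-nginx
  rules:
    - host: app.dev.internal.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web
                port:
                  number: 80
```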

Automation / Configuration Management

In the following policy definition, we implement an automated method for adding PVC backup/restore functionality by dynamically creating the resources needed. In this example, the VolSync controller is used to create PVC snapshots and export them to an S3-compatible storage server using the restic backup utility. VolSync is also used to populate the PVC from the latest snapshot during initial provisioning. This requires three additional resources: a Secret with the restic repository information, a VolSync ReplicationSource, and a VolSync ReplicationDestination. A Kyverno rule is (mostly) limited to performing a single operation (validate, mutate, or generate) on a single resource, so several rules are needed to create and modify the required resources.

The automation should be triggered whenever a PVC is created with the “kyverno.volsync.target: minio” annotation.
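A PVC that opts in might look like the sketch below. Only the target annotation is required; the snapshot class, UID, and GID annotations are the optional overrides read by the policy’s rules. Their values, the names, and the storage class are placeholders.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data
  namespace: apps
  annotations:
    kyverno.volsync.target: "minio"                  # triggers the automation
    kyverno.volsync.snapshotclass: "csi-snapclass"   # optional, hypothetical class name
    kyverno.volsync.uid: "1000"                      # optional mover UID override
    kyverno.volsync.gid: "1000"                      # optional mover GID override
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: standard                         # placeholder
  resources:
    requests:
      storage: 5Gi
```

Given this PVC, the rules would produce `app-data-volsync-minio-secret`, `app-data-src`, and `app-data-dst` in the `apps` namespace.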

Rules

- Match the annotated PVC and clone the templated restic repository secret.

- Match the annotated PVC and generate a VolSync ReplicationSource resource.

- Match the annotated PVC and generate a VolSync ReplicationDestination resource.

- Match the cloned repository secret from step 1, and mutate the repository destination, which is based on the name of the PVC.

- Match the annotated PVC and mutate it to add the ReplicationDestination as the data-source for populating the volume with the latest snapshot.

```yaml
apiVersion: kyverno.io/v2beta1
kind: ClusterPolicy
metadata:
  name: create-volsync-pvc
spec:
  generateExisting: true
  rules:
    - name: clone-restic-secret
      match:
        any:
          - resources:
              kinds:
                - PersistentVolumeClaim
              annotations:
                kyverno.volsync.target: "minio"
      generate:
        synchronize: true
        apiVersion: v1
        kind: Secret
        name: "{{request.object.metadata.name}}-volsync-minio-secret"
        namespace: "{{request.object.metadata.namespace}}"
        clone:
          namespace: storage
          name: volsync-minio-secret
    - name: create-volsync-src
      match:
        any:
          - resources:
              kinds:
                - PersistentVolumeClaim
              annotations:
                kyverno.volsync.target: "minio"
      generate:
        synchronize: true
        apiVersion: volsync.backube/v1alpha1
        kind: ReplicationSource
        name: "{{request.object.metadata.name}}-src"
        namespace: "{{request.object.metadata.namespace}}"
        data:
          spec:
            sourcePVC: "{{request.object.metadata.name}}"
            trigger:
              schedule: "0 */6 * * *"
            restic:
              copyMethod: Snapshot
              pruneIntervalDays: 7
              repository: "{{request.object.metadata.name}}-volsync-minio-secret"
              volumeSnapshotClassName: '{{request.object.metadata.annotations."kyverno.volsync.snapshotclass" || `null` }}'
              cacheCapacity: 10Gi
              cacheStorageClassName: openebs-hostpath
              cacheAccessModes: ["ReadWriteOnce"]
              accessModes: "{{request.object.spec.accessModes}}"
              moverSecurityContext:
                runAsUser: '{{to_number(request.object.metadata.annotations."kyverno.volsync.uid") || `568` }}'
                runAsGroup: '{{to_number(request.object.metadata.annotations."kyverno.volsync.gid") || `568` }}'
                fsGroup: '{{to_number(request.object.metadata.annotations."kyverno.volsync.gid") || `568` }}'
              retain:
                hourly: 168
                daily: 30
                weekly: 16
                monthly: 12
    - name: create-volsync-dest
      match:
        any:
          - resources:
              kinds:
                - PersistentVolumeClaim
              annotations:
                kyverno.volsync.target: "minio"
      generate:
        synchronize: true
        apiVersion: volsync.backube/v1alpha1
        kind: ReplicationDestination
        name: "{{request.object.metadata.name}}-dst"
        namespace: "{{request.object.metadata.namespace}}"
        data:
          spec:
            trigger:
              manual: restore-once
            restic:
              repository: "{{request.object.metadata.name}}-volsync-minio-secret"
              copyMethod: Snapshot
              volumeSnapshotClassName: '{{request.object.metadata.annotations."kyverno.volsync.snapshotclass" || `null` }}'
              cacheStorageClassName: openebs-hostpath
              cacheAccessModes: ["ReadWriteOnce"]
              cacheCapacity: 10Gi
              accessModes: "{{request.object.spec.accessModes}}"
              capacity: "{{request.object.spec.resources.requests.storage || '1Gi'}}"
              moverSecurityContext:
                runAsUser: '{{to_number(request.object.metadata.annotations."kyverno.volsync.uid") || `1000` }}'
                runAsGroup: '{{to_number(request.object.metadata.annotations."kyverno.volsync.gid") || `1000` }}'
                fsGroup: '{{to_number(request.object.metadata.annotations."kyverno.volsync.gid") || `1000` }}'
    - name: mutate-restic-secret
      match:
        any:
          - resources:
              kinds:
                - Secret
              selector:
                matchLabels:
                  generate.kyverno.io/rule-name: clone-restic-secret
      context:
        # recover the PVC name from the cloned secret's name
        - name: pvc_name
          variable:
            value: "{{request.object.metadata.name | replace(@, '-volsync-minio-secret', '', `1`)}}"
      mutate:
        patchStrategicMerge:
          data:
            RESTIC_REPOSITORY: "{{base64_encode('s3:http://minio.storage.svc.cluster.local:9000/restic/{{pvc_name}}')}}"
    - name: mutate-volsync-pvc
      match:
        any:
          - resources:
              kinds:
                - PersistentVolumeClaim
              annotations:
                kyverno.volsync.target: "minio"
      mutate:
        patchStrategicMerge:
          spec:
            dataSourceRef:
              kind: ReplicationDestination
              apiGroup: volsync.backube
              name: "{{request.object.metadata.name}}-dst"
```
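The clone-restic-secret rule expects a template Secret named `volsync-minio-secret` in the `storage` namespace. A sketch of that template, assuming VolSync’s restic mover reads the usual restic and S3 credential keys; all values are placeholders, and `RESTIC_REPOSITORY` is overwritten in each clone by the mutate-restic-secret rule.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: volsync-minio-secret
  namespace: storage
type: Opaque
stringData:
  # replaced per PVC in each clone by the mutate-restic-secret rule
  RESTIC_REPOSITORY: "s3:http://minio.storage.svc.cluster.local:9000/restic/placeholder"
  RESTIC_PASSWORD: "change-me"
  AWS_ACCESS_KEY_ID: "minio-access-key"
  AWS_SECRET_ACCESS_KEY: "minio-secret-key"
```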

Feature Extensibility

In the final example, we use a Kyverno policy to add a feature not otherwise available natively in Kubernetes. Pods can be configured with generic ephemeral volumes: a PVC is provisioned from a claim template when the pod is created and is deleted along with the pod. These are generally used for scratch space where a tmpfs may not be appropriate. The generated PVC is always named <pod-name>-<volume-name>. This means that when the pod is part of a Deployment, the PVC name inherits the “randomized” suffix of the pod name generated by the ReplicaSet controller. I had a requirement for one pod to create an ephemeral volume that other pods could also mount. Without knowing the volume’s name in advance, this is not possible natively in Kubernetes.

Using a Kyverno policy, we can dynamically mutate the necessary resources to create this feature. Ephemeral PVCs are bound to external pods by matching annotations on the ephemeral PVCs and on the deployment containing the target pod. Each ephemeral PVC is created with an annotation giving it a common name, “kyverno.pvc.mount=<pvc-common-name1>”, and a consuming deployment carries an annotation listing the common names of all the ephemeral PVCs it needs to mount: “kyverno.pvc.mount=<pvc-common-name1>,<pvc-common-name2>”. The target pod definition must already contain the volume mount for each eventual PVC, referencing a volume named after its common name.
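To make the annotation scheme concrete, here is a sketch of a producer and a consumer under assumed names (namespace `dev`, Deployments `producer` and `consumer`, common name `scratch`). The producer’s generic ephemeral volume copies the annotation from its `volumeClaimTemplate` onto the generated PVC. The consumer lists `scratch` in its annotation and pre-declares a volume and mount named `scratch`, with the same `placeholder` claim name the policy falls back to. Sharing one PVC between pods on different nodes also assumes a storage class that supports ReadWriteMany.

```yaml
# Producer: its pod gets an ephemeral PVC named <pod-name>-scratch,
# annotated with the common name "scratch"
apiVersion: apps/v1
kind: Deployment
metadata:
  name: producer
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: producer
  template:
    metadata:
      labels:
        app: producer
    spec:
      containers:
        - name: main
          image: busybox:1.36
          command: ["sleep", "3600"]
          volumeMounts:
            - name: scratch
              mountPath: /scratch
      volumes:
        - name: scratch
          ephemeral:
            volumeClaimTemplate:
              metadata:
                annotations:
                  kyverno.pvc.mount: scratch   # copied onto the generated PVC
              spec:
                accessModes: ["ReadWriteMany"]
                resources:
                  requests:
                    storage: 5Gi
---
# Consumer: lists the common names it wants and pre-declares the volume
apiVersion: apps/v1
kind: Deployment
metadata:
  name: consumer
  namespace: dev
  annotations:
    kyverno.pvc.mount: scratch
spec:
  replicas: 1
  selector:
    matchLabels:
      app: consumer
  template:
    metadata:
      labels:
        app: consumer
    spec:
      containers:
        - name: main
          image: busybox:1.36
          command: ["sleep", "3600"]
          volumeMounts:
            - name: scratch            # volume name must equal the common name
              mountPath: /scratch
      volumes:
        - name: scratch
          persistentVolumeClaim:
            claimName: placeholder     # rewritten by the policy to <producer-pod>-scratch
```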

Rules

- Match the creation of new PVCs carrying the annotation, then find all deployments in the same namespace whose annotation lists the PVC’s common name, and mutate each one so its volume references the claim name of the newly created PVC. This rule handles the case where the consuming deployment already exists when the ephemeral PVC is created.

- Match the creation or update of deployments carrying the annotation, then make an API call to list all annotated PVCs in the same namespace. For each common name listed in the deployment’s annotation, mutate the deployment so the volume claim references the matching ephemeral PVC (or a placeholder if none exists yet). This rule handles the case where the ephemeral PVC already exists before the consuming deployment.

```yaml
apiVersion: kyverno.io/v2beta1
kind: ClusterPolicy
metadata:
  name: dynamic-mount-pvc
spec:
  rules:
    # when an annotated PVC is created, patch existing deployments that reference it
    - name: pvc
      match:
        any:
          - resources:
              kinds:
                - PersistentVolumeClaim
              operations:
                - CREATE
              annotations:
                kyverno.pvc.mount: "*"
      context:
        - name: pvc_name
          variable:
            jmesPath: request.object.metadata.annotations."kyverno.pvc.mount"
      mutate:
        targets:
          - apiVersion: apps/v1
            kind: Deployment
            namespace: "{{ request.namespace }}"
            preconditions:
              all:
                # the deployment's annotation lists this PVC's common name
                - key: "{{ pvc_name }}"
                  operator: In
                  value: '{{target.metadata.annotations."kyverno.pvc.mount" || '''' | split(@,'','')}}'
                # skip deployments whose volume already points at this PVC
                - key: '{{target.spec.template.spec.volumes[?name==''{{ pvc_name }}''] | [0].persistentVolumeClaim.claimName || ''''}}'
                  operator: NotEquals
                  value: "{{ request.object.metadata.name }}"
        patchStrategicMerge:
          spec:
            template:
              spec:
                volumes:
                  - name: "{{ pvc_name }}"
                    persistentVolumeClaim:
                      claimName: "{{ request.object.metadata.name }}"
    # when a deployment is created or modified, patch the referenced volumes with the found (or not-found) PVCs
    - name: deployment
      match:
        any:
          - resources:
              kinds:
                - apps/v1/Deployment
              operations:
                - CREATE
                - UPDATE
              annotations:
                kyverno.pvc.mount: "*"
      context:
        - name: pvcs
          apiCall:
            urlPath: "/api/v1/namespaces/{{request.namespace}}/persistentvolumeclaims"
            jmesPath: 'items[?metadata.annotations."kyverno.pvc.mount" != `null`].{name: metadata.name, pvc_name: metadata.annotations."kyverno.pvc.mount"}'
      mutate:
        foreach:
          - list: 'request.object.metadata.annotations."kyverno.pvc.mount" | split(@,'','')'
            patchStrategicMerge:
              spec:
                template:
                  spec:
                    volumes:
                      - name: "{{ element }}"
                        persistentVolumeClaim:
                          claimName: "{{pvcs[?pvc_name=='{{element}}'] | [0].name || 'placeholder'}}"
```

Further Reading

The complete feature set, options, knobs, and criteria for writing Kyverno policies are well beyond the scope of this post, but they are covered thoroughly in the Kyverno documentation. The documentation also covers additional considerations for RBAC, compatibility with GitOps tools, tracing, and reporting.

Kyverno also provides a playground for testing policies prior to deployment into a cluster. This can be immensely useful for debugging and validating custom policies.

Finally, Kyverno hosts a community-maintained library of policies. At the time of writing, it contains over 400 policies covering a variety of validation and mutation needs.